AI Procedural Landscape Generator

Thesis project combining Stable Diffusion with a custom C++ OpenGL renderer for AI-guided terrain generation.

Overview

This project aims to simplify landscape generation by removing the steep learning curve and limitations of traditional tools such as World Machine and Gaea, and of node-based workflows such as Blender Geometry Nodes and Houdini. It combines:

- AI-Powered Generation: Uses Stable Diffusion with custom-trained LoRA models to generate heightmaps.

-

Real-Time Rendering: OpenGL-based C++ application for visualizing and rendering terrains.

-

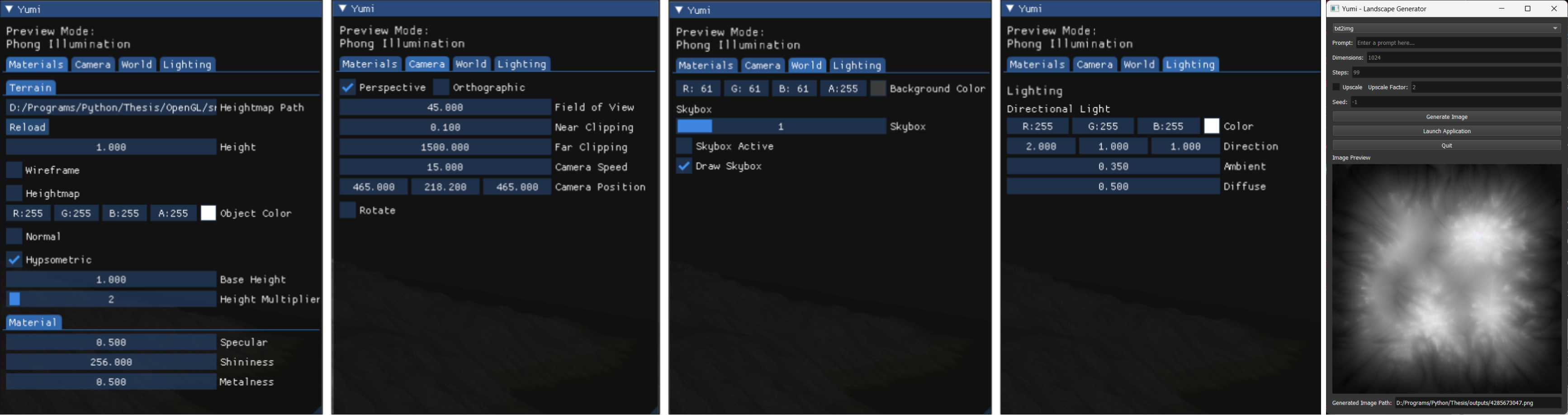

User-Friendly GUI: PyQt5 and ImGUI interface for easy interaction with the generation pipeline.

- Dataset Collection: Scripts for gathering and processing Digital Elevation Models (DEMs) from OpenTopography, custom data generated in Blender using Shaders, Geometry Nodes, and scripting.

The work was developed as part of my Master’s dissertation and targets real-world production constraints in games, VFX, and virtual environments.

Research Question

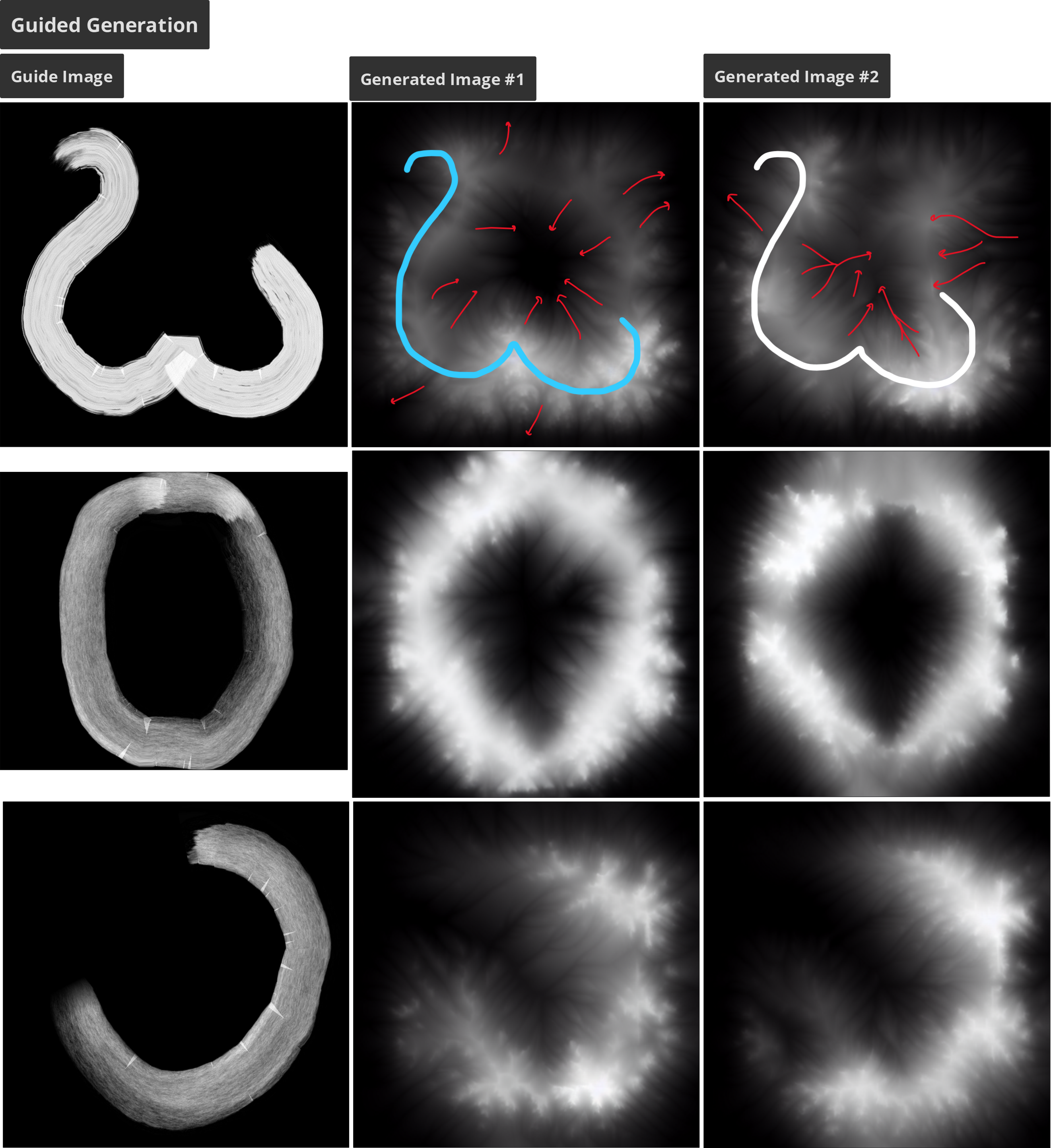

This project investigates the use of latent diffusion models for procedural terrain synthesis, with a focus on generating high-quality heightmaps that can be directly converted into large-scale 3D landscapes. The primary objective was to replace parameter-heavy, simulation-driven terrain workflows with a data-driven, example-based system that remains fast, controllable, and reproducible.

Technical Motivation

Traditional procedural terrain pipelines rely on fractals, erosion solvers, and node-based graphs. While physically motivated, these approaches suffer from:

- High iteration cost

- Complex parameter spaces

- Limited scalability for large terrains

Recent GAN-based solutions improve automation but introduce instability, tiling artifacts, and large dataset requirements. This project explores Stable Diffusion fine-tuned for heightmap generation as a more scalable alternative, leveraging latent space efficiency and transfer learning to achieve higher fidelity with significantly lower training cost.

System Architecture

The system is designed as a modular, tile-based generation pipeline that converts noise into heightmaps and then into real-time 3D terrain.

```mermaid
flowchart TD
    A[DEM Dataset] --> B[Preprocessing]
    B --> C[Stable Diffusion + LoRA]
    C --> D[Heightmap Tiles]
    D --> E[Terrain Assembly]
    E --> F[3D Rendering Engine]
    style A fill:#4FC3F7,stroke:#2B7A9E,color:#000
    style B fill:#4FC3F7,stroke:#2B7A9E,color:#000
    style C fill:#4FC3F7,stroke:#2B7A9E,color:#000
    style D fill:#4FC3F7,stroke:#2B7A9E,color:#000
    style E fill:#4FC3F7,stroke:#2B7A9E,color:#000
    style F fill:#4FC3F7,stroke:#2B7A9E,color:#000
```

Component Breakdown

1. Data & Representation Layer

Input Data

- Digital Elevation Models (DEMs) sourced from OpenTopography and curated datasets.

- Converted to single-channel heightmaps (grayscale), where luminance encodes elevation.
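The DEM-to-heightmap conversion can be sketched as a min/max normalisation. This is a minimal illustration, not the project's preprocessing script. It assumes a 16-bit output, because 8 bits give only 256 height levels and produce visible terracing. It also assumes that no-data cells are filled with the lowest valid elevation; the function name and fill strategy are hypothetical.

```python
def elevations_to_heightmap(elevations, nodata=None):
    """Normalise a 2D grid of DEM elevations (e.g. metres) to 16-bit grey levels.

    Returns (pixels, lo, hi): lo/hi are the elevation range, kept so real-world
    heights can be recovered later as lo + pixel / 65535 * (hi - lo).
    """
    valid = [v for row in elevations for v in row if v != nodata]
    if not valid:
        raise ValueError("grid contains no valid elevation samples")
    lo, hi = min(valid), max(valid)
    span = hi - lo
    pixels = []
    for row in elevations:
        out_row = []
        for v in row:
            if v == nodata:
                v = lo  # assumed fill strategy: holes become the lowest valid height
            t = (v - lo) / span if span > 0 else 0.0
            out_row.append(int(t * 65535 + 0.5))  # round half up to an integer level
        pixels.append(out_row)
    return pixels, lo, hi
```

For example, `[[100.0, 150.0], [200.0, -9999.0]]` with `nodata=-9999.0` maps to `[[0, 32768], [65535, 0]]`. The returned range is what lets a generated tile be rescaled back to real-world heights.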

Why Heightmaps?

- GPU-friendly and memory efficient

- Engine-agnostic (Unreal, Unity, Godot, custom engines)

- Direct mapping to vertex displacement

Heightmaps serve as the system’s canonical representation, decoupling generation from rendering.

2. Diffusion Model Architecture

The core generator is a latent diffusion model (Stable Diffusion) composed of:

```mermaid
flowchart TD
    A[Image / Noise] --> B[VAE Encoder]
    B --> C[Latent Space]
    C --> D[U-Net & Cross-Attention]
    D --> E[VAE Decoder]
    E --> F[Heightmap Output]
    style A fill:#4FC3F7,stroke:#2B7A9E,color:#000
    style B fill:#4FC3F7,stroke:#2B7A9E,color:#000
    style C fill:#4FC3F7,stroke:#2B7A9E,color:#000
    style D fill:#4FC3F7,stroke:#2B7A9E,color:#000
    style E fill:#4FC3F7,stroke:#2B7A9E,color:#000
    style F fill:#4FC3F7,stroke:#2B7A9E,color:#000
```

- VAE (Variational Autoencoder) compresses heightmaps into a latent space, reducing compute cost.

- U-Net performs iterative denoising, learning terrain structure and spatial relationships.

- Cross-attention enables conditioning using text prompts or guiding images.
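The compute saving from the VAE comes from the U-Net denoising a much smaller tensor than the image itself. The arithmetic below assumes the Stable Diffusion 1.x/2.x VAE (8x spatial downsampling, 4 latent channels), which other model families may not share. It also assumes that a grayscale heightmap is replicated into the 3 RGB channels the VAE expects. The function names are illustrative only.

```python
def latent_shape(height, width, downsample=8, latent_channels=4):
    """(channels, h, w) of the VAE latent for an image of height x width pixels.

    Defaults assume the Stable Diffusion 1.x/2.x VAE: 8x spatial downsampling
    and 4 latent channels. Other model families use different values.
    """
    if height % downsample or width % downsample:
        raise ValueError("image sides must be multiples of the downsample factor")
    return latent_channels, height // downsample, width // downsample


def compression_ratio(height, width, image_channels=3, **vae):
    """How many pixel values map onto each latent value."""
    c, h, w = latent_shape(height, width, **vae)
    return (image_channels * height * width) / (c * h * w)


# A grayscale heightmap is assumed to be replicated into 3 RGB channels.
print(latent_shape(512, 512))       # (4, 64, 64)
print(compression_ratio(512, 512))  # 48.0
```

So a 512 x 512 heightmap is denoised as a 4 x 64 x 64 latent, 48 times fewer values than the RGB image the decoder eventually produces.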

3. LoRA Fine-Tuning Strategy

Instead of retraining the full diffusion model:

- Base Stable Diffusion weights are frozen

- Low-Rank Adaptation (LoRA) layers are injected into cross-attention blocks

- Only rank-decomposed matrices are trained
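The mechanism follows the standard LoRA formulation: a frozen weight `W` is augmented with a trainable low-rank product, `y = W x + (alpha / r) * B (A x)`. `B` starts at zero, so the adapted layer initially behaves exactly like the base model. The pure-Python sketch below shows that idea for a single linear layer. It is not this project's training code. Class and parameter names are hypothetical, and a real setup would wrap projections inside the cross-attention blocks using a deep-learning framework.

```python
import random


def matvec(m, v):
    """Multiply matrix m (list of rows) by vector v."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


class LoRALinear:
    """Frozen weight W (d_out x d_in) plus a trainable low-rank update B @ A."""

    def __init__(self, weight, rank, alpha=None, seed=0):
        self.weight = weight  # frozen: never updated during fine-tuning
        d_out, d_in = len(weight), len(weight[0])
        rng = random.Random(seed)
        # A (r x d_in) gets small random values; B (d_out x r) starts at zero,
        # so B @ A == 0 and the layer initially equals the base layer.
        self.A = [[rng.gauss(0.0, 0.01) for _ in range(d_in)] for _ in range(rank)]
        self.B = [[0.0] * rank for _ in range(d_out)]
        self.scale = (alpha if alpha is not None else rank) / rank

    def forward(self, x):
        base = matvec(self.weight, x)
        delta = matvec(self.B, matvec(self.A, x))
        return [b + self.scale * d for b, d in zip(base, delta)]

    def trainable_params(self):
        """Only A and B are trained; W is excluded."""
        return len(self.A) * len(self.A[0]) + len(self.B) * len(self.B[0])
```

With `W = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]]` and `rank=1`, `forward([1.0, 0.0, 1.0])` returns `[4.0, 10.0]`, identical to the frozen layer, until training moves `B` away from zero.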

Benefits

- Training possible with 20–100 samples

- Adapter files measured in megabytes rather than the gigabytes of a full checkpoint

- Enables rapid experimentation on consumer GPUs
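The size reduction follows from simple parameter counting. A rank-`r` adapter on a `d_out x d_in` layer trains `r * (d_in + d_out)` values instead of `d_in * d_out`. The example layer below is assumed to be a cross-attention key projection mapping a 768-d text embedding to 320 dimensions, a shape found in the first U-Net blocks of Stable Diffusion 1.x. The rank of 4 and the fp16 storage are assumptions for illustration, not the project's settings.

```python
def lora_params(d_in, d_out, rank):
    """Trainable values LoRA adds to one layer: A (rank x d_in) + B (d_out x rank)."""
    return rank * (d_in + d_out)


def full_params(d_in, d_out):
    """Values in the frozen weight matrix itself (bias ignored)."""
    return d_in * d_out


def size_mb(num_params, bytes_per_param=2):
    """Storage in megabytes (10**6 bytes); 2 bytes per value assumes fp16."""
    return num_params * bytes_per_param / 1e6


# Assumed example layer: 768 -> 320 cross-attention key projection, rank 4.
full = full_params(768, 320)     # 245760
lora = lora_params(768, 320, 4)  # 4352
print(full, lora, round(full / lora, 1))  # 245760 4352 56.5
```

Repeated over every adapted projection, that roughly 56x per-layer saving is what keeps a whole adapter small enough to train and swap quickly on a consumer GPU.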

This makes the system practical for indie teams, research prototyping, and tool development.

4. Tile-Based Generation System

Large terrains are generated using overlapping tiles, enabling scalability without exhausting GPU memory.

```mermaid
flowchart TD
    A[Global Seed] --> B[Tile Coordinates]
    B --> C[Conditional Diffusion]
    C --> D[Seam-aware Heightmap Tiles]
    style A fill:#4FC3F7,stroke:#2B7A9E,color:#000
    style B fill:#4FC3F7,stroke:#2B7A9E,color:#000
    style C fill:#4FC3F7,stroke:#2B7A9E,color:#000
    style D fill:#4FC3F7,stroke:#2B7A9E,color:#000
```
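The "Global Seed → Tile Coordinates" step can be sketched as two small functions. One lays out overlapping tiles along an axis. The other derives a stable per-tile seed from the global seed and the tile's grid position. Both are hypothetical helpers, not the project's code. The seed uses `hashlib` because Python's built-in `hash()` is salted per process for strings, which would break reproducibility across runs. The resulting integer could then seed the noise generator for that tile, for example via `torch.Generator().manual_seed(seed)`.

```python
import hashlib


def tile_origins(world_size, tile_size, overlap):
    """Pixel offsets of overlapping tiles along one axis of the world."""
    if not 0 <= overlap < tile_size:
        raise ValueError("overlap must be in [0, tile_size)")
    stride = tile_size - overlap
    origins = list(range(0, max(world_size - tile_size, 0) + 1, stride))
    # If the regular stride stops short, snap one extra tile to the far edge.
    if origins[-1] + tile_size < world_size:
        origins.append(world_size - tile_size)
    return origins


def tile_seed(global_seed, tx, ty):
    """Stable 32-bit seed for tile (tx, ty), identical on every run and machine."""
    key = f"{global_seed}:{tx}:{ty}".encode()
    return int.from_bytes(hashlib.sha256(key).digest()[:4], "little")


xs = tile_origins(1408, 512, 64)  # [0, 448, 896]
seeds = {(tx, ty): tile_seed(42, tx, ty) for tx in range(len(xs)) for ty in range(len(xs))}
```

Because each seed depends only on the global seed and the tile's coordinates, any single tile can be regenerated exactly without replaying the rest of the world.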

- Neighbor-aware conditioning reduces seams

- Deterministic seeds allow exact regeneration

- Individual tiles can be re-generated without invalidating the entire terrain
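One common way to realise neighbor-aware conditioning is outpainting. The overlapping strips of already-generated neighbours are copied into the new tile and marked as fixed, and the diffusion model only fills in the rest. The sketch below builds such an initial heightmap and mask. It is an assumed technique, not necessarily the one used in this project, and the function name is hypothetical. It follows the usual inpainting convention where 1 marks pixels to generate and 0 marks pixels to keep. It also assumes neighbours sit exactly `tile_size - overlap` pixels away on a regular grid.

```python
def outpaint_inputs(tile_size, overlap, left=None, top=None, fill=0.0):
    """Build an initial heightmap and inpainting mask for a new tile.

    left / top: already-generated neighbour tiles (tile_size x tile_size
    nested lists) or None. mask: 0 = known from a neighbour, 1 = generate.
    """
    init = [[fill] * tile_size for _ in range(tile_size)]
    mask = [[1] * tile_size for _ in range(tile_size)]
    offset = tile_size - overlap  # stride between neighbouring tile origins
    for y in range(tile_size):
        for x in range(tile_size):
            if left is not None and x < overlap:
                # Rightmost `overlap` columns of the left neighbour.
                init[y][x] = left[y][offset + x]
                mask[y][x] = 0
            elif top is not None and y < overlap:
                # Bottom `overlap` rows of the top neighbour.
                init[y][x] = top[offset + y][x]
                mask[y][x] = 0
    return init, mask
```

The first tile has no neighbours, so its mask is all ones and it is generated freely. Every later tile inherits its borders, which is what keeps seams continuous. The left neighbour wins in the shared corner, which only matters if the two neighbours disagree there.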

This mirrors how open-world games stream terrain data at runtime, similar to World Partition in Unreal Engine 5.

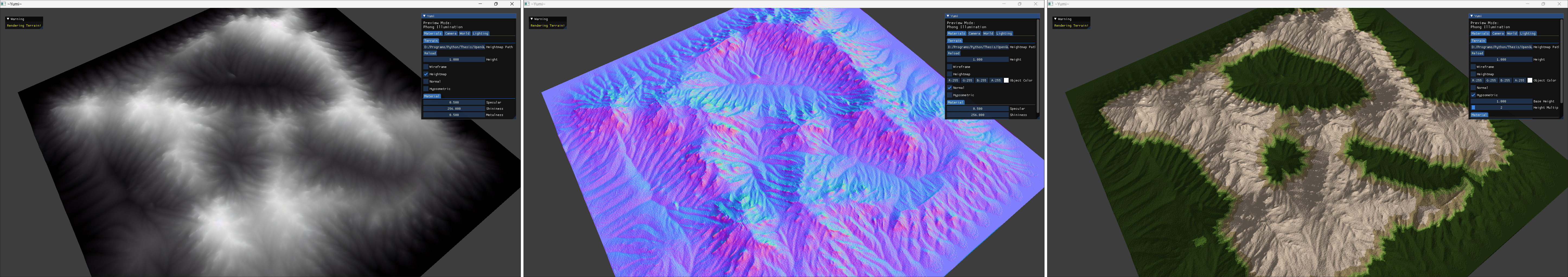

5. Terrain Reconstruction & Rendering

Generated heightmaps are converted into 3D terrain via:

- High-density mesh tessellation

- Vertex displacement from height values

- Procedural normal generation

- UV mapping and shading
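The four steps above can be sketched for a regular grid. The project's renderer is written in C++ with OpenGL; this Python version only illustrates the same ideas and is not that code. The function name, `cell_size`, and `height_scale` are hypothetical parameters. Normals come from central differences of the height field, using one-sided differences at the borders.

```python
import math


def build_terrain_mesh(heights, cell_size=1.0, height_scale=1.0):
    """Turn an H x W heightmap into vertex positions, normals, UVs and indices.

    Y is up; grid column x maps to world X and grid row z maps to world Z.
    """
    rows, cols = len(heights), len(heights[0])
    if rows < 2 or cols < 2:
        raise ValueError("heightmap must be at least 2 x 2")
    vertices, normals, uvs = [], [], []
    for z in range(rows):
        for x in range(cols):
            # Vertex displacement: the height sample becomes the Y coordinate.
            vertices.append((x * cell_size, heights[z][x] * height_scale, z * cell_size))
            # Central differences (one-sided at the borders) estimate the slope.
            xl, xr = max(x - 1, 0), min(x + 1, cols - 1)
            zu, zd = max(z - 1, 0), min(z + 1, rows - 1)
            dhdx = (heights[z][xr] - heights[z][xl]) * height_scale / ((xr - xl) * cell_size)
            dhdz = (heights[zd][x] - heights[zu][x]) * height_scale / ((zd - zu) * cell_size)
            # Normal of the surface y = h(x, z) is (-dh/dx, 1, -dh/dz), normalised.
            length = math.sqrt(dhdx * dhdx + 1.0 + dhdz * dhdz)
            normals.append((-dhdx / length, 1.0 / length, -dhdz / length))
            # UVs span [0, 1] across the whole heightmap.
            uvs.append((x / (cols - 1), z / (rows - 1)))
    indices = []
    for z in range(rows - 1):
        for x in range(cols - 1):
            i = z * cols + x
            # Two triangles per cell, counter-clockwise when viewed from +Y
            # (OpenGL's default front face).
            indices += [i, i + cols, i + 1, i + 1, i + cols, i + cols + 1]
    return vertices, normals, uvs, indices
```

Mesh density is controlled by how finely the heightmap is sampled (`cell_size`), and precision by `height_scale`. Those are the two knobs the custom renderer is meant to expose without full engine overhead.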

A custom lightweight renderer was implemented to:

- Minimize iteration time

- Maintain control over mesh density and precision

- Visualize results without full engine overhead

Why?

This system enables scalable world generation by allowing large terrains to be prototyped and regenerated incrementally, with deterministic results suitable for production pipelines. AI is used as a front-loaded generator that artists guide through prompts, masks, and seeds, preserving creative control rather than handing it over to automation. By operating on heightmaps, the workflow integrates directly with existing engines and tools, while remaining extensible to related data such as material maps, foliage density, and biome masks. This positions diffusion-based PCG as a general-purpose foundation for world building.

Key Takeaways

- Latent diffusion models outperform GANs for procedural terrain synthesis

- Example-based learning removes the need for explicit geological simulation

- Tile-aware diffusion enables scalable open-world generation

- AI-assisted PCG works best as a creative accelerator, not a black box

Below are some generated results:

TL;DR

Built a diffusion-based procedural terrain system that generates production-ready heightmaps in seconds using Stable Diffusion fine-tuned with LoRA. The pipeline scales to large worlds via tile-based generation, produces deterministic results for iteration and version control, and integrates directly with existing game engines through standard heightmap workflows. Designed as an artist-guided tool, not a black box, enabling rapid prototyping without sacrificing control.

Have any more questions? Find me on Twitter or shoot me an email :)